Do you play every note ahead of the beat or only first ones?

Because that’s what I do when playing fast passages: start them a bit earlier and then finish on the beat )

I tried to get rid of that habit, but then I realized that it’s not that audible, and… I like it more that way sometimes.

Though when I try to play some passage in a robotic-like fashion I start with downstrokes synced with a metronome. Like: ta ta ta ta tadatadatadatada… )

Well, I think you guys are correct about the latency, thanks @Tom0711 @tommo. I tried guitar direct into a Focusrite Scarlett 2i4 and into Logic Pro X. Notes are ahead of the beat. Tried all the low-latency mode / low buffer settings etc. Then tried the Axe-Fx via USB into Logic Pro and got the same result: notes ahead of the beat.

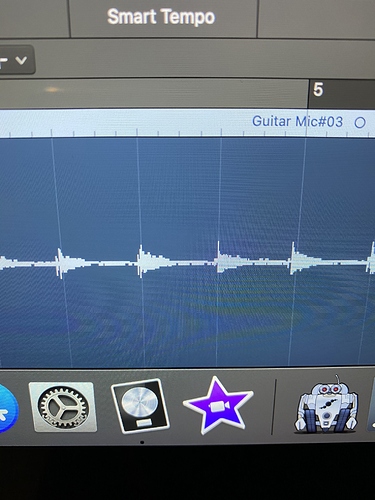

Finally tried a USB mic with the guitar unplugged. Timing near enough on point. Must be a latency issue. Here is a screenshot of the mic recording of the unplugged guitar.

Sounds good, problem identified

I don’t know Logic Pro.

I’m using Ardour on Linux, and Ardour determines the latency parameters using a cable between sound output and input. Ardour then plays an impulse, step or whatever to measure the latency of output + input.

The recorded audio is then shifted by this value to compensate for the latency.
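To make the shift concrete, here is a minimal sketch in Python. It is only an illustration of the idea, not how Ardour implements it; the function name `compensate_latency` and the plain list of samples are assumptions made for the example.

```python
def compensate_latency(recorded, latency_samples):
    """Shift a recorded take earlier by the measured roundtrip latency.

    `recorded` is a list of samples, `latency_samples` the measured
    roundtrip latency expressed in samples (both hypothetical inputs).
    """
    if latency_samples < 0:
        raise ValueError("latency_samples must be non-negative")
    # Drop the samples that were only delay, then pad the end with
    # silence so the take keeps its original length.
    shifted = recorded[latency_samples:]
    return shifted + [0.0] * (len(recorded) - len(shifted))


# A note that was played on the beat but landed 3 samples late.
take = [0.0, 0.0, 0.0, 1.0, 0.5, 0.0]
aligned = compensate_latency(take, 3)
print(aligned)
```

If the measured value is wrong, the take ends up early or late by exactly that error, which is why the measurement step matters.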

I’m sure there is some setting in Logic that can be adjusted or measured automatically as well. But maybe someone else using Logic can help you.

Good luck.

A lot of good advice here already!

Regardless of the DAW, the source of roundtrip latency is the selected buffer size. If I recall correctly:

Preferences > Audio > General (for Logic)

A very small buffer size like 64 samples gives you a barely noticeable latency, but it is taxing on the CPU, especially if you are playing through a modern amp sim like BIAS Amp with an IR cab sim. The bigger the buffer, the bigger the latency. 1024 is unplayable, but you would have noticed this even before you recorded anything. If you don’t use the amps in Logic or any other plugins to play through, the best way to go is direct monitoring of your audio interface’s analog input, because it has zero latency, and you can use bigger buffers, which makes the DAW run smoother and less likely to drop out during your best take.

EDIT:

And about Ardour: most of the “big” DAWs calculate the roundtrip latency anyway, because they do that compensation; some also show the calculated value beside the buffer size (Live, and I think Logic?). So no need for that with Logic.

That’s almost correct

The buffer size is a crucial part of the overall latency. So are USB latency and some other small components, so it depends on your overall system configuration, including hardware.

I analyzed my “live rig”:

Native Instruments Audio Kontrol 1

ThinkPad 420s

Ubuntu Linux

JACK audio server

Guitarix

While the buffer size in JACK corresponds to something around 2.33 ms, the measured roundtrip latency is something around 6 ms. Totally playable, but ~3 ms more than the calculated buffer value. So measuring is definitely the best way to determine the roundtrip latency.

I might look into my system setup a little deeper to see if I can improve that a little more once I’m bored. Definitely not by the buffer size settings. Tried → didn’t work.

Yes, using amp simulations on the same machine could also lead to “playing ahead of the beat” because you might unconsciously compensate for the latency.

You engineer, you!

You are certainly correct. MIDI for example has its own latency as well, but I just wanted to give a simple solution for this input latency thing.

I wonder though, if the latency causes an issue that is audible after recording, it should be noticeable during the recording as well.

Edit: about the other stuff you describe concerning latency, I think it’s negligible, as long as roundtrip latency doesn’t go above 10 ms.

BUT, as I said, I would always recommend monitoring the analog input of your audio interface, because there is no latency.

I plead guilty.

Well, there is of course the output latency. You hear the recorded material delayed by the output latency, then you play. At this point, everything is in perfect sync, as both signals are in the same time domain (waiting for physics critique by tommo ;). Then input latency is added, and both together delay your recorded material relative to the stuff you have been playing to, so the DAW should shift your new recording to “the left” by the overall roundtrip latency, and thus it should all be in sync as long as the latency settings are correct.
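The timeline argument can be checked with a few lines of arithmetic. This is a rough sketch under simplifying assumptions: the player hits exactly on what they hear, and the function names and the 3 ms example values are made up for illustration.

```python
def recorded_position_ms(beat_ms, output_latency_ms, input_latency_ms):
    """Where a note lands on the timeline before any compensation."""
    heard_at = beat_ms + output_latency_ms   # the click reaches the ears late
    played_at = heard_at                     # assumption: perfectly in time with what is heard
    return played_at + input_latency_ms      # the note reaches the DAW later still


def compensated_position_ms(recorded_ms, output_latency_ms, input_latency_ms):
    """Shift the recording left by the overall roundtrip latency."""
    return recorded_ms - (output_latency_ms + input_latency_ms)


raw = recorded_position_ms(1000.0, 3.0, 3.0)
fixed = compensated_position_ms(raw, 3.0, 3.0)
print(raw, fixed)
```

With correct latency values the note returns to the beat it was played against; if the DAW assumes too large a roundtrip value, the same shift pushes the take ahead of the beat.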

10 ms corresponds to roughly 3.4 m of air distance (sound travels at about 343 m/s), so that should not be recognizable. Of course it is an extra 3.4 m, so go for headphones.
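For a quick sanity check on the latency-to-distance comparison, here is a small Python sketch. It assumes a speed of sound of about 343 m/s (dry air at roughly 20 °C); with that value 10 ms comes out closer to 3.4 m. The function names are hypothetical.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed value: dry air at about 20 °C


def latency_to_distance_m(latency_ms, speed=SPEED_OF_SOUND_M_PER_S):
    """Distance sound travels through air during the given latency."""
    return speed * latency_ms / 1000.0


def distance_to_latency_ms(distance_m, speed=SPEED_OF_SOUND_M_PER_S):
    """Acoustic delay caused by standing distance_m away from a speaker."""
    return 1000.0 * distance_m / speed


print(round(latency_to_distance_m(10.0), 2))   # metres for 10 ms
print(round(distance_to_latency_ms(2.0), 2))   # milliseconds for 2 m
```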

No problem as long as you record acoustic or a mic’d amp. If you use amp simulation, that doesn’t work. But it’s a good way to tell if the latency is recognizable. If you hear the clean electric guitar before the amp sim result → it is.

Thomas

Keep in mind that the sample rate is important too.

E.g. 44100 Hz with a 512-sample buffer gives you about 11.6 ms of pure buffer latency (plus latency from hardware, drivers etc.), while 192000 Hz with a 512-sample buffer gives you about 2.7 ms. So my advice is to use as high a sample rate as possible, even if it’s actually hardware upsampling (which is a common thing for budget audio cards).
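The buffer part of the latency is just buffer length divided by sample rate. A minimal Python sketch, counting one buffer only and ignoring hardware and driver overhead; the function name is made up for the example:

```python
def buffer_latency_ms(buffer_samples, sample_rate_hz):
    """Time it takes to fill one buffer, in milliseconds."""
    return 1000.0 * buffer_samples / sample_rate_hz


# Same 512-sample buffer at three common sample rates.
for rate in (44100, 96000, 192000):
    print(rate, round(buffer_latency_ms(512, rate), 2))

# Buffer sizes from small to large at 44.1 kHz.
for size in (64, 256, 1024):
    print(size, round(buffer_latency_ms(size, 44100), 2))
```

This only covers the buffer itself. The measured roundtrip figure will be higher, since input and output buffers plus converter and driver delays all add up.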

Hey @weealf, I think this whole latency thing is going a bit beyond your original question. What I originally meant was this:

If your latency is too high, you will hear yourself later than you actually played. This might cause you to subconsciously compensate and play early. But this would be recognizable while playing as well, if you concentrate on it.

I am quite curious what the actual cause and solution is. Any luck figuring it out?

That’s still ahead of the beat – I’m more of a Pro Tools guy, but if I had to guess, there are some there that look 5-10 ms ahead.

The place to be for pretty much every guitar part in reference to the grid is maybe a little behind, maybe right on, but (basically) never ahead, not in the various styles that most of us listen to (which is 95% of the stuff that’s not maybe bebop or something). I’ve been in a TON of sessions, and – I don’t say “never” a whole lot – I have never heard a producer, player, or artist say, “can you slide that rhythm part so it’s slightly ahead (of the grid, beat, etc.)?” But it’s VERY routine to be asked to “lay back” something.

Not to get all, “back in my day, we used to walk to school…in the snow…uphill…BOTH WAYS!” on you, but the quickest way to learn this is to be in a situation where, if the groove “feels” right, you’ll get called back, and if it doesn’t, you won’t be able to pay rent or put gas in the tank. At that point, everything is on the line, and you have to make judgments while keeping a smile on your face – how’s the tone? Is the part you’re playing right? Where exactly is the beat, and how much swing should there be? Should I suggest doubling, or should I wait for the producer to suggest it, or should this part NOT be doubled? So, in lieu of some kind of wish for “slow (metaphorical) death,” like “going into engineering as a sole profession” (where, these days, you will quickly realize that Bad Bunny has more current relevance to your clients than “Eddie Van WHO?”), I’d say that your mission, should you choose to accept it, is to discover how to hold yourself to the kind of standard that YOU respect, basically. The best way I can verbalize it is maybe a thought experiment: if you made a compilation playlist from some album tracks in the same/similar genre or something (imagine a “movie soundtrack”), you stuck your finished master in the middle, and you asked a cross-section of listeners (from kids to parents to pro musician/engineer types) to pick which of the five or so is NOT a broadcast-ready release from a serious artist/band/label, would most or all be able to pick yours? And none of this “I’ll pick the ONE stinker on the record so I can set the bar low…”

At that point, you’re making yourself accountable for making a “record,” period. I’m not even saying it’s something everyone should do or try – you’re basically saying to yourself, “can I be responsible for making something I would call a masterful piece of recorded music right now, or not?” And that also makes you accountable to the listening audience’s judgment (or let’s call it “choice”), which is really, truly, not what a lot of us are in it for (to acquire a chunk of the vast, general audience that chooses us in terms of being “more pleasing background music than the other thing” – remember, we overanalyze these 80’s solos, but they went platinum because people would buy the cassettes to crank up on a road trip or on the boat with a cooler full of, you know, ice and soda!). But when it’s your turn and it’s your “time to shine,” maybe you’ll remember that some ego-trip old-school engineer guy on what’s his name…“Trey something’s”…forum said that every now and then, there is no such thing as “good ENOUGH” – it’s either RIGHT, or it’s not!

I mean, I see a pic like that, and I have the urge to go CLICK, command-E, CLICK, command-E five times, F2, F6, click-click-click, F4, F8, click-drag (etc.), F6 click-click-click, then click-drag-command-F a few times…takes about 10 seconds (if you don’t think so, watch a teenager play Grand Theft Super MMA on PS5.23 Gold or whatever – that’s the speed you should be clicking those hotkeys with)…hit play, and, as my one buddy would say, “BAM, you’re making money!” Assuming there’s a kick and snare on that grid, tell me EVERYONE’S not hearing the front edge of the pick attack before the beat hits, especially on #1, 2, 4, and 5. It’s not a big deal – fix it, replay it, get it right, soak up the sound/feel of pocket playing, and then delete it and replay it. If your instincts are there, it might not even take that long to make a quantum leap with this. Depending on what the part is, I’d probably be putting it behind the grid just a little, by the way – maybe that’s a slide of 1-4 ms after gridding. Of course, the ideal is to play it in that way. But you don’t get a “gold star” or an “E” for effort – the end goal is to make it SOUND good.

By the way, if you REALLY want to teach yourself to play the stuff in the first time, do the fixing until you think it’s perfect, hard pan the part to the left, and record a double on the right. Listen to the two soloed. When you get it nailed so it sounds like one big wide guitar coming at you kind of from the center (and not something that “pulls” to one side or the other at moments depending on how you hit a certain note), then MUTE the original, fixed track, record another double to the natural one that you cut, and evaluate the two of THOSE together.

And I said in another post that Mustaine was on Headbanger’s Ball in the early 90’s saying how great it was to move waveforms on RADAR (before the Pro Tools revolution) to make a precise record when making “Rust In Peace.” JUDICIOUS fixing of audio is an absolutely legitimate part of a workflow that can make great records!

The timing thing is my #1 gripe with digital recording. How technology didn’t end up at “direct monitoring” with sample-accurate timing on playback is beyond me. Somehow it wasn’t prioritized in the development shuffle.

The whole thing can (electronically) be accomplished with relays and a cheap monitor mixer. The only one who ever got it right IMO is Chris with CLASP (CLASP Analog). This was marketed as a way to get tape sound into a Pro Tools workflow (and it worked brilliantly!), but the real advance was the auto-relaying in the hardware unit combined with the plug-in. So the relays split the signal to the monitor mixer (allowing for analog domain ZERO latency monitoring) and to the A/D converter, and the plug-in slides each take to the EXACT location on the timeline that it was recorded at, using a reading from a roundtrip playback/recording calibration. The plug-in also mutes the track’s channel during “record” takes and unmutes during playback.

You can figure out your offset in the same manner with your own roundtrip calibration: create a VERY short digital waveform – maybe zoom WAY in on a kick drum and cut everything after the very first peak. So this should sound like a digital glitch. Plug an output into your input, create a track, MUTE IT so you don’t get feedback, and record the glitch back into your computer. Do they line up? If not, there is an offset. Zoom way in and set your ruler to Samples – at 44.1k, 44 samples is about 1 ms. In Pro Tools, you can just click-drag horizontally between the two glitches to get the exact length – the counter has “Start,” “End,” and “Length.” Whatever the “Length” says is your offset.
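If you export both the original glitch and the re-recorded one as sample data, the same measurement can be sketched in Python. This is a rough illustration, not a Pro Tools feature: the helper names, the 0.1 threshold, and the synthetic sample lists are all assumptions for the example.

```python
def first_onset(samples, threshold=0.1):
    """Index of the first sample whose absolute value exceeds the threshold."""
    for index, value in enumerate(samples):
        if abs(value) > threshold:
            return index
    return None


def loopback_offset(original, recorded, sample_rate_hz, threshold=0.1):
    """Offset between the two glitches, as (samples, milliseconds)."""
    start_original = first_onset(original, threshold)
    start_recorded = first_onset(recorded, threshold)
    if start_original is None or start_recorded is None:
        raise ValueError("no onset found above threshold")
    offset_samples = start_recorded - start_original
    return offset_samples, 1000.0 * offset_samples / sample_rate_hz


# Synthetic data: the glitch sits at sample 100 and comes back at sample 365.
original = [0.0] * 100 + [1.0] + [0.0] * 899
recorded = [0.0] * 365 + [0.9] + [0.0] * 634
offset, offset_ms = loopback_offset(original, recorded, 44100)
print(offset, round(offset_ms, 2))
```

A simple threshold works here because the test signal is a single sharp click. On noisy loopback recordings the threshold would need to sit above the noise floor.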

The only thing this offset approach doesn’t accomplish is curing the input latency, if you’re monitoring through digital (DAW). Splitting the signal (or using multiple mics) and monitoring strictly in analog with a multi-input monitor controller or console (or cheap mixer) is the only way around this. And hitting “mute” for every take is a royal PITA. The biggest difference for me is for tracking background vocals – those instant adjustments for pitch fall into place more solidly with zero latency, and the usual “take one earphone off” solution takes you out of any kind of soundstage. Our brains are not wired to really process different signals in each ear.